Advances in 3D Object Recognition for Industrial Machine Vision Systems

Advances in 3D Object Recognition for Industrial Machine Vision Systems - Deep Learning Integration in 3D Object Recognition Systems

Deep learning is changing how 3D objects are recognized, moving beyond traditional methods by analyzing objects from multiple viewpoints. Multiview 3D recognition lets a system gather richer information about an object by considering its appearance from different angles. Multiview convolutional neural networks (MVCNNs) have been central to this shift, helping systems capture the complexity of 3D shapes.

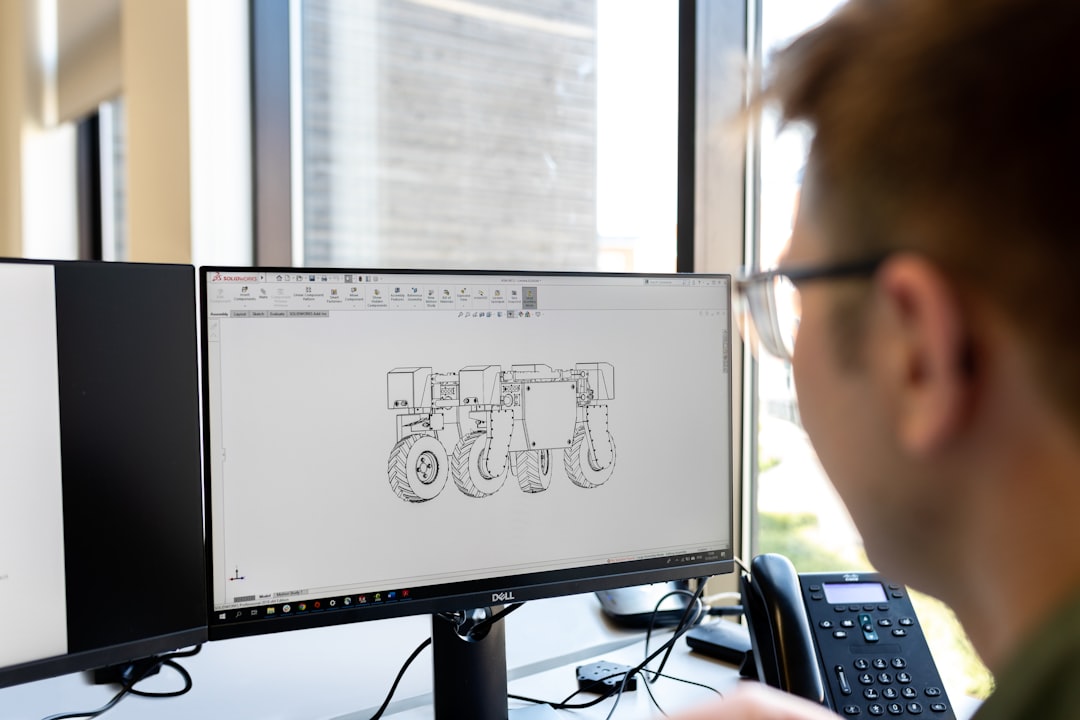

This approach isn't just about improving accuracy; it's also about streamlining the entire process. Combining deep learning with existing machine vision systems and 3D CAD models is driving progress in areas such as recognizing machining features on parts. It reflects a broader trend toward integrating depth information into object recognition to make systems more accurate and efficient. Even so, the field still faces challenges in refining 3D reconstruction and ensuring robust performance across diverse datasets.

Deep learning has made a real impact on 3D object recognition, going beyond simple feature extraction. Neural networks now learn features directly from 3D data: convolutional neural networks (CNNs) operate on voxel grids or rendered views, and related architectures consume point clouds directly, a step up from relying solely on hand-crafted geometric descriptors. This has improved recognition accuracy, especially in industrial settings.

Speed is key in those settings, and more efficient networks and faster hardware now make it feasible to process spatial information in real time for many tasks. This is crucial for dynamic environments, where quick responses are vital. Multi-view learning techniques are also taking hold, allowing systems to analyze objects from several angles at once. This helps reduce issues with occlusion, which can be a big problem with single-view approaches.

The ability to adapt pre-trained models from 2D datasets to work with 3D data through transfer learning is a game-changer. It means we can leverage existing knowledge, reducing the need for massive amounts of labeled 3D data for training.

Even data scarcity, a common challenge in 3D object recognition, is being tackled with generative adversarial networks (GANs). These networks can generate synthetic 3D models to augment training sets, helping improve system robustness.

Another interesting development is the use of contrastive learning. These techniques can embed 3D object features in lower-dimensional spaces, which speeds up retrieval and recognition without sacrificing discriminative power.
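To make the retrieval step concrete, here is a minimal sketch, assuming the embeddings have already been produced by some trained encoder (not shown). The function names, the part labels, and the two-dimensional vectors are illustrative assumptions; real embeddings would typically have many more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / norm


def nearest_label(query, gallery):
    """Return the gallery label whose embedding is most similar to the query.

    gallery maps a label (hypothetical part name) to its embedding.
    """
    return max(gallery, key=lambda label: cosine_similarity(query, gallery[label]))


# Hypothetical two-part gallery with toy 2D embeddings.
gallery = {"bracket": [1.0, 0.0], "gear": [0.0, 1.0]}
print(nearest_label([0.9, 0.2], gallery))
```

Because the comparison is a dot product over short vectors, lookup cost depends on the embedding size rather than on the size of the original point cloud.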

There's also a move toward using graph-based networks to analyze 3D data. This is promising because it lets us better understand the relationships between points in a point cloud, something that traditional grid-based methods often miss.
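Graph-based networks need a graph to work on, and a common first step is to connect each point to its k nearest neighbors. The sketch below shows that step only, not a network; the function name `knn_graph` and the brute-force search are assumptions chosen for clarity, and a real pipeline would likely use a spatial index such as a k-d tree.

```python
import math


def knn_graph(points, k):
    """Return {point index: [indices of its k nearest other points]}.

    Brute force, so cost grows quadratically with the number of points.
    """
    if not 0 < k < len(points):
        raise ValueError("k must be between 1 and len(points) - 1")
    graph = {}
    for i, p in enumerate(points):
        others = [j for j in range(len(points)) if j != i]
        # Sort the other points by Euclidean distance to point i.
        others.sort(key=lambda j: math.dist(p, points[j]))
        graph[i] = others[:k]
    return graph


cloud = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (5, 5, 5)]
print(knn_graph(cloud, 2))
```

The resulting edges give the network explicit neighborhood relationships, which a regular voxel grid only captures implicitly.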

Deep learning models need to be reliable in industrial applications, and enhanced regularization techniques are helping to prevent overfitting, which can be a serious problem when dealing with variations in object shapes and appearances.

We're also seeing researchers explore the integration of sensor fusion techniques with deep learning models. Combining data from LiDAR, cameras, and depth sensors has the potential to further improve object recognition accuracy.
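As a simple illustration of why fusion helps, the sketch below combines depth estimates for the same point from several sensors using inverse-variance weighting. It assumes independent, roughly Gaussian errors with known standard deviations; the function name and the example numbers are hypothetical, and learned fusion inside a network is considerably more involved.

```python
def fuse_depths(estimates):
    """Fuse (depth_m, std_dev_m) pairs by inverse-variance weighting.

    Returns (fused_depth_m, fused_std_dev_m). Assumes independent errors.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(sigma <= 0 for _, sigma in estimates):
        raise ValueError("standard deviations must be positive")
    # More precise sensors (smaller sigma) get larger weights.
    weights = [1.0 / (sigma * sigma) for _, sigma in estimates]
    total = sum(weights)
    fused = sum(w * depth for w, (depth, _) in zip(weights, estimates)) / total
    fused_sigma = (1.0 / total) ** 0.5
    return fused, fused_sigma


# Hypothetical readings: a LiDAR return and a depth-camera pixel for the same point.
print(fuse_depths([(2.00, 0.02), (2.06, 0.05)]))
```

Under these assumptions the fused standard deviation is never larger than that of the best individual sensor, which is the basic argument for combining modalities.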

Despite all these advancements, interpretability remains a challenge. Understanding how deep learning models make decisions based on complex spatial features is crucial for building trust in these systems. We need to work toward making them more transparent and easier to understand.

Advances in 3D Object Recognition for Industrial Machine Vision Systems - Advancements in Time-of-Flight Technology for Industrial Applications

Time-of-flight (ToF) technology is becoming more powerful and useful for industrial applications, especially in the field of 3D object recognition and machine vision systems. These advancements are bringing about significant improvements in how these systems function.

New ToF cameras, such as the LUCID Helios2, offer capabilities like high dynamic range (HDR) and high-speed processing modes. This allows for real-time depth imaging, which is crucial for dynamic industrial environments where things are constantly changing. A related innovation, distinct from ToF, is parallel structured light, which improves the ability to scan objects in motion. This is highly beneficial for automated processes like bin picking and object sorting.

The need for quicker data analysis is growing rapidly in industrial automation. To meet this demand, advanced ToF sensors are being integrated into systems, leading to more reliable performance and improved quality control during production. However, there are still some hurdles to overcome in terms of ensuring consistent accuracy across different operational conditions. This is driving ongoing research in sensor fusion and the development of more refined algorithms.

ToF technology is gaining traction in industrial applications because it measures distance directly. ToF sensors emit light and measure how long it takes to return; since the light covers the distance twice, depth is the speed of light multiplied by half the round-trip time. For applications that demand strict tolerances, like manufacturing, the millimeter-level precision that well-calibrated sensors can reach at short range under good conditions is a real advantage.
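The underlying arithmetic is short enough to write out. This is a minimal sketch of the pulsed time-of-flight relationship only; the function names are illustrative, and real cameras often measure phase shift of modulated light rather than timing a single pulse.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by SI definition


def tof_distance_m(round_trip_time_s):
    """Distance to the target from a light pulse's round-trip time."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def timing_resolution_s(depth_resolution_m):
    """Round-trip timing resolution needed to resolve a given depth step."""
    return 2.0 * depth_resolution_m / SPEED_OF_LIGHT_M_PER_S


print(tof_distance_m(10e-9))        # a 10 ns round trip is roughly 1.5 m
print(timing_resolution_s(0.001))   # 1 mm of depth is a few picoseconds
```

The second function shows why millimeter precision is demanding: resolving 1 mm of depth means resolving a round-trip time difference of under 7 picoseconds.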

Recent developments in ToF technology have produced higher-resolution sensors capable of capturing detailed 3D images in real time. This matters for tasks like quality inspection, where detailed images are crucial for identifying small flaws. Imagine a robot performing visual inspection on a moving production line, checking for defects as parts pass by. That's where high-resolution ToF imaging comes into play.

But ToF technology isn't just about capturing images; it's about leveraging the data we get from those images. These sensors are being increasingly paired with artificial intelligence algorithms, allowing for more sophisticated object detection and scene analysis. Think about it: instead of relying solely on human oversight in quality control, we could have AI systems analyzing ToF data to flag potential problems, potentially catching defects that might otherwise slip through the cracks.

However, there are challenges that still need to be addressed. One issue is the sensitivity of ToF sensors to environmental factors. Stray light, reflective surfaces, and even dust can interfere with readings, creating inaccuracies. Advanced filtering techniques are being developed to address these issues, but it's still a hurdle that needs to be overcome, especially in complex industrial settings.
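As a deliberately simple stand-in for the filtering mentioned above, the sketch below takes a per-pixel median over a few consecutive depth frames, which suppresses isolated spikes such as a momentary glint. It assumes a static scene and frames stored as lists of rows; the function name is hypothetical, and production filters are considerably more sophisticated.

```python
from statistics import median


def temporal_median(frames):
    """Per-pixel median over a list of equally sized depth frames.

    Each frame is a list of rows, each row a list of depth values in meters.
    """
    if not frames:
        raise ValueError("need at least one frame")
    rows, cols = len(frames[0]), len(frames[0][0])
    return [
        [median(frame[r][c] for frame in frames) for c in range(cols)]
        for r in range(rows)
    ]


# Three 1x2 frames; the 9.0 in the second frame is a spurious reading.
frames = [[[1.0, 2.0]], [[1.0, 9.0]], [[1.1, 2.1]]]
print(temporal_median(frames))
```

The trade-off is latency: the filter needs several frames per output, so it suits slow or static inspection better than fast-moving parts.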

On the plus side, most ToF devices emit near-infrared light and filter out other wavelengths, which makes them less sensitive to ambient visible light. Strong sunlight also contains infrared and can still degrade readings, but this design opens up a wider range of environments, from dusty manufacturing floors to construction sites where lighting can be inconsistent.

Another positive development is the decreasing power consumption of ToF sensors. Advancements in technology have made it possible to create battery-powered ToF devices for mobile applications, such as drones used for warehouse management and inspection tasks. This gives us a lot more flexibility and expands the scope of where these sensors can be used.

The future of ToF technology is bright, with researchers developing miniaturized sensors that can be integrated into compact devices. Imagine having these sensors embedded in production lines, providing high-resolution depth readings without requiring large, cumbersome setups. This could revolutionize assembly line operations.

Multi-channel ToF sensing is another promising development, enabling systems to capture incredibly fast movements and dynamic scenes. This is essential for high-speed manufacturing processes where speed and accuracy are paramount.

The trend toward integrating ToF sensors with other technologies like LiDAR and RGB cameras is exciting. This combination of sensors creates a more complete perception system for applications like autonomous vehicles, potentially enabling them to navigate industrial environments safely and efficiently.

But while ToF technology is a powerful tool, its adoption in industry isn't without its drawbacks. Cost is a significant hurdle, and the specialized knowledge required to implement these systems can be a barrier for smaller businesses. These challenges need to be addressed for ToF technology to become truly widespread in industrial settings. Even so, the advancements described here show a great deal of promise for industrial automation and other sectors.

Advances in 3D Object Recognition for Industrial Machine Vision Systems - Multiview Convolutional Neural Networks in Manufacturing Processes

Multiview Convolutional Neural Networks (MVCNNs) have emerged as a powerful tool for improving 3D object recognition in industrial applications. By analyzing multiple 2D images of a 3D object, MVCNNs allow systems to gain a more comprehensive understanding of its shape and features. This approach is enhanced by techniques like view saliency, which identifies the most important visual information from each angle. MVCNNs have also tackled the issue of working with limited view data, enabling effective recognition even when only a few images are available.
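One common way to combine per-view features into a single object descriptor is an element-wise maximum across views, often called view pooling. The sketch below shows only that aggregation step, assuming each view has already been passed through a 2D CNN (not shown) to yield a feature vector; the function name and the toy vectors are illustrative.

```python
def view_pool(view_features):
    """Element-wise max over per-view feature vectors (one list per view)."""
    if not view_features:
        raise ValueError("need at least one view")
    length = len(view_features[0])
    if any(len(v) != length for v in view_features):
        raise ValueError("all views must have the same feature length")
    # zip(*...) groups the i-th feature of every view together.
    return [max(column) for column in zip(*view_features)]


# Two hypothetical views of the same part, three features each.
front = [0.1, 0.9, 0.3]
side = [0.4, 0.2, 0.8]
print(view_pool([front, side]))
```

Because the maximum does not depend on the order of the views, the pooled descriptor is the same regardless of which camera position is listed first, and the same code works whether two views or twenty are available.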

The use of data transformations, like "jittering," further enhances MVCNNs by making them more robust to variations in object pose and position. This is particularly valuable in industrial settings where objects might be presented at different angles or in varying lighting conditions. In the realm of additive manufacturing, MVCNNs are demonstrating their value by analyzing time-series thermal images to monitor crucial process parameters like laser power and scan speed.
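The article does not define jittering precisely, so the sketch below makes an assumption: it treats jittering as a small random rotation about the vertical axis plus a small random shift, applied to a point cloud before views are rendered. The function name, parameter names, and default ranges are all hypothetical.

```python
import math
import random


def jitter_points(points, max_angle_deg=10.0, max_shift=0.01, rng=None):
    """Return a copy of the points with a small random pose perturbation.

    Rotates about the z-axis by up to max_angle_deg and translates each
    axis by up to max_shift (same units as the points).
    """
    rng = rng or random.Random()
    angle = math.radians(rng.uniform(-max_angle_deg, max_angle_deg))
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    dx, dy, dz = (rng.uniform(-max_shift, max_shift) for _ in range(3))
    return [
        (cos_a * x - sin_a * y + dx, sin_a * x + cos_a * y + dy, z + dz)
        for x, y, z in points
    ]


part = [(1.0, 0.0, 0.0), (0.0, 2.0, 1.0)]
augmented = jitter_points(part, rng=random.Random(0))  # seeded for repeatability
print(augmented)
```

Generating several jittered copies per training sample exposes the network to the kind of pose variation it will meet on a real line, where parts rarely arrive in exactly the same orientation.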

Despite the exciting progress, there are still challenges to overcome in order to fully realize the potential of MVCNNs in industry. One of the biggest obstacles is the need to account for variations in object representation, as objects might appear differently based on their shape, material, and the way they're presented to the camera. Ensuring that systems can accurately recognize objects across this wide range of possibilities is crucial for reliable performance. Another critical concern is interpretability. Deep learning models are often considered "black boxes," making it difficult to understand how they reach their conclusions. This lack of transparency can be a barrier to trust and adoption in safety-critical industrial environments.

MVCNNs are particularly well suited to 3D object recognition in industrial settings. They work from multiple 2D projections of a 3D object, which gives a more complete picture of its shape and appearance, especially when occlusions are common. This multi-perspective approach can improve recognition accuracy over single-view methods, because a feature hidden in one view is often visible in another. Capturing this richer information also helps the networks generalize across viewpoints and scales.

A key benefit of MVCNNs is how they cope with data-scarce environments. Because each view is an ordinary 2D image, they can reuse image networks pre-trained on large 2D datasets, which can reduce the amount of labeled 3D data needed for training. This makes them particularly attractive in industrial settings where acquiring large amounts of labeled 3D data can be a significant challenge.

However, MVCNNs are not without their drawbacks. One significant hurdle is computational efficiency. Processing multiple views requires more memory and computing power, posing a challenge for real-time applications. Researchers are actively working to optimize these networks to make them more suitable for real-time industrial processes.

Despite these challenges, MVCNNs hold immense promise for improving defect detection accuracy. By analyzing anomalies from multiple perspectives, they can reduce false positives, ensuring the maintenance of product quality in high-speed manufacturing. This is crucial for minimizing disruptions in production and ensuring customer satisfaction.

The future of MVCNNs is promising, with researchers exploring integration with reinforcement learning algorithms. The aim is a more interactive system in which the model not only recognizes objects but also optimizes actions based on previous predictions. This dynamic approach could lead to more intelligent and adaptable machine vision systems, capable of responding to evolving scenarios in real time.

While MVCNNs offer a powerful tool for industrial applications, the challenges of noise and distortions in industrial settings remain. This is a crucial area of research, as the ability to handle these uncertainties is paramount for the robust and reliable implementation of these networks in real-world applications.

The path to widespread industrial adoption of MVCNNs lies in ongoing advancements in hardware, particularly specialized GPU architectures. These advancements will enable more efficient processing of large volumes of multi-view data, allowing MVCNNs to unlock their full potential for revolutionizing industrial automation.

Advances in 3D Object Recognition for Industrial Machine Vision Systems - LiDAR Integration for Enhanced Environmental Awareness

LiDAR, or Light Detection and Ranging, has emerged as a crucial technology for improving environmental awareness. Its ability to generate detailed 3D maps and accurately detect objects is revolutionizing various fields, including autonomous driving and industrial automation. When integrated with camera systems, LiDAR enhances real-time object recognition, making it invaluable for systems that need to navigate complex environments. For example, in self-driving cars, LiDAR helps detect obstacles and plan routes, while in industrial settings, it aids in precise object identification and manipulation.

One key advantage of LiDAR is its ability to function effectively regardless of varying lighting conditions, unlike traditional cameras. This robustness makes it ideal for dynamic environments where lighting can change quickly. Despite its growing popularity, LiDAR still faces challenges. Integrating sensor data from LiDAR with other sensors, like cameras, requires sophisticated algorithms and careful calibration. Moreover, ensuring the accuracy and reliability of these systems across diverse conditions, especially in complex industrial environments, requires ongoing research and development.

As LiDAR technology continues to evolve, we can expect even greater advancements in environmental awareness and safety. The future promises enhanced algorithms, more powerful sensors, and improved data processing capabilities, leading to more efficient and reliable systems for a wide range of applications.

LiDAR technology is improving environmental awareness in various industrial applications. Its ability to capture millions of points in a single scan provides detailed spatial information, surpassing traditional surveying methods in both resolution and precision. Unlike passive cameras, which rely on ambient light reflected from the scene, LiDAR emits its own laser pulses and times their return to determine distances to objects, creating a 3D representation of the environment. This allows for the differentiation of various surfaces, such as vegetation and buildings, which can be challenging for other imaging methods.
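A LiDAR return is typically a range along a known beam direction, and turning it into a 3D point is a spherical-to-Cartesian conversion. The sketch below assumes a particular convention (x forward, y left, z up, azimuth measured in the horizontal plane, elevation measured up from it); actual sensors differ, so the axis convention and function name here are assumptions.

```python
import math


def polar_to_cartesian(range_m, azimuth_deg, elevation_deg):
    """Convert one LiDAR return to an (x, y, z) point in the sensor frame."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the x-y plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


# A return 10 m away, 90 degrees to the left, level with the sensor.
print(polar_to_cartesian(10.0, 90.0, 0.0))
```

Applying this to every return in a sweep produces the point cloud that downstream recognition algorithms consume.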

One of LiDAR's greatest strengths is that, as an active sensor, it works in darkness and under changing light, where optical cameras struggle. Heavy rain, fog, and dust do scatter laser pulses and can reduce range and point density, so performance in poor weather should be validated rather than assumed. Where conditions allow, this reliability extends the frequency and accuracy of data collection for applications such as industrial and environmental monitoring. Moreover, LiDAR sensors can be integrated into diverse platforms, including drones, ground vehicles, and satellites, offering flexible data acquisition strategies tailored to specific industrial needs. This adaptability is essential for applications like infrastructure inspection and resource mapping.

The integration of LiDAR with machine learning is particularly promising for enhancing 3D object recognition. Machine learning algorithms can leverage the structured point cloud data generated by LiDAR, which contains detailed geometric information. This often leads to improved performance in distinguishing between complex objects in industrial applications, making LiDAR a valuable tool for tasks like quality control and automated sorting.

A major advantage of LiDAR data is its ability to generate highly accurate Digital Elevation Models (DEMs). These models are crucial for various applications, including urban planning, vegetation analysis, and even archaeology. They enable detailed topographical assessments, providing invaluable insights into the terrain and its characteristics.
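To show the basic idea of turning a point cloud into an elevation grid, the sketch below bins points into square cells and keeps the lowest height in each cell as a crude ground estimate. This is a simplification: production DEM workflows classify ground returns and interpolate gaps. The function name and the dictionary-based grid are assumptions made for brevity.

```python
import math


def rasterize_dem(points, cell_size):
    """Map (col, row) grid cells to the lowest z among points in that cell."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    dem = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        # Keep the minimum height seen so far for this cell.
        dem[cell] = min(z, dem.get(cell, z))
    return dem


# Two returns land in cell (0, 0): a canopy hit at 5 m and a ground hit at 1 m.
cloud = [(0.2, 0.3, 5.0), (0.8, 0.1, 1.0), (1.5, 0.5, 2.0)]
print(rasterize_dem(cloud, cell_size=1.0))
```

Keeping the lowest return per cell is what lets a grid like this approximate the ground even where higher returns come from foliage or equipment above it.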

However, despite its advantages, LiDAR data processing remains a significant challenge. Processing LiDAR data requires considerable computational resources, and optimizing these workflows is crucial for real-time applications. This area of research is actively being explored to overcome the computational bottlenecks and unlock the full potential of LiDAR in time-sensitive industrial applications.

LiDAR's ability to penetrate canopy cover makes it an invaluable tool for forestry and ecology studies. It can reveal ground-level features obscured by tree foliage, providing critical data for inventorying tree species and assessing forest health. This opens up possibilities for better forest management, conservation efforts, and ecological research.

The cost of LiDAR technology has been steadily decreasing, making it more accessible for small and medium-sized enterprises. Advancements in solid-state LiDAR systems, which offer lower production costs and increased durability, are expected to further drive the adoption of this technology.

An exciting trend emerging in the field is the fusion of LiDAR data with other sensors, such as RGB cameras and thermal imaging. This multi-modal approach allows for comprehensive environmental assessments by integrating data from various sources, enabling more informed decision-making in diverse applications. The future of LiDAR seems promising, with ongoing research aiming to improve processing efficiency, expand applications, and further integrate it into multi-sensor systems. As LiDAR technology continues to evolve, its impact on environmental awareness and industrial processes will likely become even more significant.

Advances in 3D Object Recognition for Industrial Machine Vision Systems - Non-Contact 3D Shape Measurement Techniques in Quality Control

Non-contact 3D shape measurement techniques are becoming increasingly essential for quality control in manufacturing. These methods offer a way to precisely assess intricate surfaces without the risk of physical contact, ensuring that high standards are met without damaging the objects being inspected.

Recent advancements have introduced sophisticated imaging techniques, such as stereo vision and structured light projection, which allow for the measurement of complex, large-scale surfaces with greater accuracy. The application of active optical techniques further enhances data acquisition through dense point cloud generation, providing comprehensive information for various purposes, ranging from production line quality assurance to medical testing.
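For stereo vision, depth follows from triangulation: for a rectified camera pair, depth equals focal length times baseline divided by disparity. The sketch below encodes that relationship and a first-order estimate of how disparity error turns into depth error. The function names and the example camera parameters are illustrative assumptions.

```python
def stereo_depth_m(focal_length_px, baseline_m, disparity_px):
    """Depth of a point seen by a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_error_m(depth_m, focal_length_px, baseline_m, disparity_error_px):
    """First-order depth uncertainty caused by a given disparity error."""
    # Differentiating Z = f * B / d gives |dZ| = Z^2 / (f * B) * |dd|.
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_error_px


# Hypothetical rig: 1000 px focal length, 10 cm baseline, 50 px disparity.
z = stereo_depth_m(1000.0, 0.10, 50.0)
print(z, depth_error_m(z, 1000.0, 0.10, 0.25))
```

The error grows with the square of depth, which is one reason stereo and structured-light systems are usually positioned close to the part for fine measurements.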

While these techniques offer great promise, they also present challenges. Managing the vast amount of point cloud data generated can be complex, and the interpretation of this data within dynamic industrial settings can require further refinement.

Non-contact 3D shape measurement techniques are becoming increasingly vital in quality control. They offer a number of advantages over traditional contact methods, such as eliminating the risk of damaging delicate parts during inspection. Techniques like laser triangulation and structured light scanning allow for fast, highly accurate measurements without any physical contact.

The speed at which these systems can capture data is remarkable. Some systems can collect millions of data points per second, making them ideal for high-speed manufacturing environments. This rapid data capture allows for quick assessments, minimizing downtime and maximizing production efficiency.

These techniques are particularly valuable for measuring complex geometries that would be difficult or impossible to analyze using traditional methods. For example, they can accurately capture intricate surface textures or delicate details on a variety of materials, although highly reflective or transparent surfaces remain difficult for optical methods.

Another key benefit is their accuracy and resolution. Depending on the technique and the measurement range, non-contact methods can achieve accuracies as fine as a few microns, meeting the stringent requirements of industries with tight tolerances. This precision ensures that quality control measures can meet both regulatory and operational standards.

These techniques can be seamlessly integrated with machine vision systems, enabling real-time monitoring of production lines. This integration allows for immediate detection of quality issues during manufacturing, enabling proactive adjustments to the production process.

Furthermore, these systems are adaptable to various industrial environments, including those with extreme temperatures or airborne contaminants. Their non-invasive nature makes them ideal for dynamic conditions where contact sensors might fail or introduce errors.

The latest advancements in non-contact measurement systems allow for color and texture analysis in addition to shape. This expanded capability enhances quality control by enabling the detection of inconsistencies in surface finishes that could indicate manufacturing defects.

The generated 3D models from these techniques offer valuable visual representations that aid in analysis and reporting. Engineers can easily visualize defects or deviations from specifications, making communication and collaboration between stakeholders more efficient.

Despite their numerous benefits, there are still limitations. One notable challenge is working with highly reflective surfaces, which can lead to measurement inaccuracies. This can often necessitate the use of additional software algorithms or specialized coatings to improve performance.

However, recent advancements in sensor technology are steadily addressing these challenges. The introduction of high-speed cameras and advanced algorithms has significantly increased the capabilities of non-contact measurement systems, continuously improving their precision, reliability, and integration with existing production systems.

Advances in 3D Object Recognition for Industrial Machine Vision Systems - Tailoring Vision Systems for Specific Industrial Part Detection

Tailoring vision systems for specific industrial part detection means matching the sensing and the algorithms to the parts and conditions at hand. As factories increasingly use 3D object recognition, it's becoming vital to have systems that can identify and check parts during production. By using techniques like deep learning and structured light, these custom vision systems can get much better at quality control and finding defects. Building a system around one job improves how well it works and cuts down on mistakes. But because these systems are built for specific jobs, they also have to handle variation, such as parts being placed differently and changing conditions, to work properly in real-world situations.

The world of industrial automation is continually evolving, and the way we detect and recognize parts is no exception. While deep learning has significantly changed the game, a key area for progress is in tailoring vision systems for specific industrial applications. These specialized systems need to be robust, adaptable, and accurate - things that are crucial for efficient manufacturing.

One approach is to customize neural network architectures to handle the specific types of objects we are interested in. Think about it: if you're working with tiny electronics components, your vision system should be optimized to detect those specific features. Adjusting parameters, like the number of layers or the type of activation function, can make a big difference in how quickly and accurately your system recognizes these objects. This is especially important in settings where you have a narrow range of parts and speed is vital.

Another powerful tool is transfer learning. It's like taking a pre-trained model that has seen a million objects and adapting it to our specific parts. The model already has a good grasp of visual concepts, so we can fine-tune it to focus on recognizing the specific objects we need to work with. This can significantly cut down on the amount of training data needed, leading to huge time savings and making the process more practical for niche applications.

But industrial environments aren't perfect. Things like reflections from shiny surfaces or uneven lighting can really mess with your vision system. This is where noise robustness comes in. Specialized algorithms are designed to filter out these distractions, making the recognition process more reliable.

We're also getting creative with generating synthetic data. Let's say you need to detect a new part, but you don't have a large collection of real images. With 3D modeling, we can actually create virtual versions of these parts, and then use them to train our model. This approach can effectively increase the size of our dataset, leading to a more generalizable and robust vision system.

The ideal vision system needs to adapt to changes. Parts might come in at different angles, or the lighting might fluctuate. Advanced systems are being designed to adjust in real time to these variations. This adaptability is crucial for a fast-paced manufacturing environment, where traditional fixed systems often fall short.

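And then we have classical feature extraction techniques, like HOG (histogram of oriented gradients) and SIFT (scale-invariant feature transform). Local features such as SIFT are designed to tolerate changes in scale and in-plane rotation, and because they describe small patches rather than the whole object, they can still match when a part is partially hidden. It's like giving the vision system a better understanding of the object's defining characteristics.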

Combining 2D images with depth information is another game-changer. Using sensors like depth cameras or LiDAR, we can get a better grasp of the object's 3D shape, helping us differentiate complex objects. Think about picking up parts from a jumbled pile - the ability to perceive depth adds a whole new dimension to this task.
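The link between a 2D pixel and a 3D point is the pinhole camera model: given a depth value and the camera intrinsics, each pixel can be back-projected into space. The sketch below assumes an undistorted image and known intrinsics; the function name and the example numbers are hypothetical.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with known depth into camera coordinates.

    fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    Assumes lens distortion has already been removed.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Hypothetical 640x480 depth camera with a 600 px focal length.
print(backproject(320, 240, 1.5, fx=600.0, fy=600.0, cx=320.0, cy=240.0))
print(backproject(920, 240, 2.0, fx=600.0, fy=600.0, cx=320.0, cy=240.0))
```

Running this over every pixel of a depth image yields a point cloud aligned with the color image, so each 3D point can carry the color and texture seen at its pixel.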

But sometimes, even advanced systems struggle with cluttered environments, where objects overlap. Here, segmentation algorithms come in, effectively separating objects from the background, allowing us to see them clearly even when they're surrounded by chaos.
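The simplest form of this separation is connected-component labeling on a foreground mask, sketched below with a breadth-first flood fill. It assumes a binary mask has already been produced (for example by thresholding depth) and uses 4-connectivity; the function name is illustrative. Note that touching or overlapping parts would merge into one component, which is where learned instance segmentation becomes necessary.

```python
from collections import deque


def label_components(mask):
    """Label 4-connected foreground regions in a binary mask.

    Returns (labels, count), where labels has the same shape as mask,
    0 marks background, and regions are numbered from 1.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:  # flood-fill this region
                cr, cc = queue.popleft()
                for nr, nc in ((cr - 1, cc), (cr + 1, cc), (cr, cc - 1), (cr, cc + 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not labels[nr][nc]:
                        labels[nr][nc] = count
                        queue.append((nr, nc))
    return labels, count


mask = [
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 1],
]
labels, count = label_components(mask)
print(count)
```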

And the best part? The solutions we develop for specific parts can often be scaled across an entire production line. With minor tweaks, we can adapt a system that recognizes one type of part to work with another. This is crucial for staying flexible in rapidly changing environments.

While fully automated systems are becoming more powerful, it's important to remember the human factor. Training these systems requires human expertise and careful attention, especially during the initial setup. And even with cutting-edge technology, engineers still play a critical role in the process, ensuring that systems are both accurate and reliable. This is especially crucial in high-stakes applications where failure isn't an option.
